The fall of Zavvi, along with several other soon-to-be-notable high street absentees and the troubles the banking system has been going through, has got me thinking.

First I want to tackle the failure of business processes. Zavvi's main reason for going out of business, according to the press, was a domino effect from Woolworths going into administration. Zavvi relied heavily on Woolworths' wholesale arm as a source of DVDs and CDs. From the outside looking in, it seems Zavvi operated with wholesale distributors of goods rather than dealing directly with manufacturers. There's nothing wrong with that, of course. However, if you deal with a middle man who goes out of business, then you're left scrambling for a replacement at the same cost. No doubt, if you have one middle man, you've probably aligned your prices closely with the distributor's (albeit probably a given percentage above).

Why wasn't this adequately highlighted as a risk? Why only have one supplier? If you must have one supplier, at least have a good idea of alternatives at the drop of a hat if that risk is realised.

This leads nicely into my second point: it almost seems that Zavvi suffered from a misapplication of the Toyota Production System, in that they pulled stock into their stores so as to minimise inventory, and therefore waste. Keeping the time between ordering from the wholesaler and getting stock into the customer's hands short is a worthy goal to aim for. After all, unsold stock is not money in the bank. But again, this raises the risk of only having one core supplier.

Moving on to Woolworths itself, I find myself asking what Woolworths actually sells, and the only answer I can fathom is that it's a general store. They never specialised in anything, meaning that they never had the buying power to lower their prices and pass the savings on to their customers, which left them with only a breadth of stock. In my opinion that wasn't a breadth of high-end stock; it was a breadth of medium to low-end goods, odds and ends and overpriced electrical/entertainment goods. If I needed a new set of tea towels, brilliant, but given the floor space they couldn't justify just selling bits and bobs like that.

This leaves me asking, who are your customers, and what differentiates you from your competitors?

Finally, how can you expect to survive charging the prices both Zavvi and Woolworths seemed happy to charge? For a product in either store, say a DVD, I could find it cheaper at full price from a fairly well-known internet-only business, Play.com, than on the high street in the post-Christmas/liquidation sales. Play.com does advertise nationally on TV, radio and in print, and although I won't argue that its brand awareness matches that of Woolworths, it's not like people couldn't find Play.com.

I guess after all is said and done, as a business you need to know your customers, know your competitors, know your business processes and understand your risks and how to mitigate those risks. On top of that, I'd like to add you should always be asking these questions, always looking for improvements and changes.

Just look at Tesco, boy have they changed! They seem to know what their customers want, and are constantly changing, adding new product lines in store and online.

Saturday, December 27, 2008

Saturday, December 20, 2008

Doing the Right thing vs Doing it right

There was one lightning talk at XPDay that has stuck in my head, and I want to try to reproduce the discussion here. I'm sure I'll get some details wrong, but you'll just have to bear with me. The talk was on doing the right thing versus doing it right.

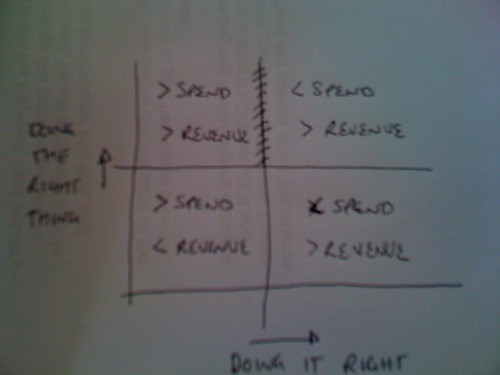

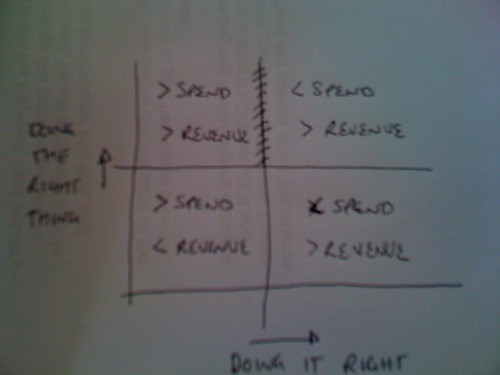

If you look at the image, you'll see an XY graph. Along the Y axis is doing the right thing, i.e. building the right solution. Along the X axis is doing it right, i.e. building the solution well. There were some studies into these metrics, but I don't know what they are, so I'm just going to use pseudo numbers.

In the lower left quadrant, you'll see doing the wrong thing, and doing it badly. The cost of the project is greater than the average IT project, and the revenue is lower than expected. In the upper left quadrant you're doing the right thing, but you're doing it wrong. Your costs are still larger than average but you are making more revenue as you're building the required product. In the bottom right you're doing the wrong thing, but you're doing it well, so your costs are lower than average, but your revenue isn't as good as expected. In the top right, you're doing the right thing and you're doing it well. Your costs are lower than average, and your revenue is higher than expected.

Now, of course, this is abstract and to be taken with a large pinch of salt, but there are some interesting deductions to be made. Firstly, we were told that according to the study, it's near impossible to go from building the right thing badly to building the right thing well. Looking at the rest, it seems intuitive that if you were doing the wrong thing badly, you'd want to do the right thing badly before doing the right thing well, but in actual fact, it's less costly and more efficient to do the wrong thing well and progress to building the right thing well.

So, where's your project on the quadrant, where do you want to be and how do you want to get there?

Software Development: By Way

I tend to think the way libraries and frameworks are made could easily be applied to teams and projects. When building an application, generally you focus on solving the business problem with the best tools available. When you move on to the next problem, you might have that eureka moment where you find that there are sub-parts of the new problem which overlap with the previous problem. Given that you've already solved this problem, you'd be silly not to reuse the solution. Thus, you have identified common functionality, and if you extract that into a new library, you have reusable code across more than one project. Brilliant.

The other way that you'd come across common functionality is through conversation. In true water-cooler fashion, this will often be coincidental, but again, if you can identify commonality and extract that, you are deriving business value. Not only are you reusing something, meaning time can be saved and all teams benefit from faster delivery, but with more eyes on the same code, the chances of that code becoming more robust grow.

So far I've mentioned code, but this could just as easily be process and tools, like build scripts, deployment practices, server provisioning and lots more too. The key is to make discovery and sharing easy, and I think that comes down to relationships and communication.

I think this model can be applied to projects too, where new projects are spawned to develop and manage commonality. Such a team doesn't necessarily have to consist of full-time staff; it could be part-time or ad hoc, but there should always be a project owner/lead, even if the project is sleeping. Anyone should feel they can contribute to the project, for the benefit of all the teams, even if there are full-timers on the project.

I think, over time, this will form a network of loosely joined teams surrounding a handful of core projects, almost like electrons around a nucleus. I see these core projects being things like:

* build scripts, including testing tools and reporting

* logging and monitoring scripts

* deployment processes

* network and hardware management and provisioning

* data access layers (especially if all projects share common relations like customers)

* service access layers, for accessing hosted services

* user interface asset management

* hosted tools support, e.g. source control, software repositories, wikis, mailing lists, story tracking, continuous integration boxes etc

There's probably a bunch more too. But as I mentioned earlier, relationships are probably the most important aspect here. Establishing a community between the project leads and another for the wider developer group across all the projects will help, but you have to support the community, let it flourish. The more the community collaborates and communicates the more they'll drive towards commonality and reuse. Of course, people will have different ideas and prefer different tools, and that should be supported as long as the principle of spinning out or contributing to projects carries on.

Monday, December 15, 2008

XPDay Review

On Thursday and Friday of last week I showed up at XPDay, and attended some cool sessions including:

Nat Pryce on TDD of async code: http://www.natpryce.com/articles/000755.html

Matt Wynne on Lean Engineering: http://blog.mattwynne.net/2008/12/14/slides-from-xp-day-talk/

Had some interesting discussions with some cool people including:

http://gojko.net/tag/xpday08/

So, I was interested in Gojko Adzic's open space session on the new FitNesse SLIM implementation. It looks like it's much, much easier to wrap domain objects instead of creating fixture classes for testing. It's not quite ready yet, but I'm looking forward to a stable release.

There was also an interesting keynote from Marc Baker of LeanUK.org (http://www.leanuk.org/pages/about_team_marc_baker.htm) on his experiences of introducing lean principles into the NHS.

Tuesday, December 09, 2008

Friday, November 28, 2008

Why I'm beginning to dislike frameworks

I've been having discussions with David and Dr Pep over the last few days about the pros and cons of development frameworks. This is probably a measure of my increasing level of cynicism, but I found myself looking at a framework that T4 was looking at and thought to myself, "what is this framework going to stop me doing?"

David raises the point of the productivity curve: in most cases, when picking up a new library or framework, doing the 'hello world' stuff is easy and your productivity is high. As you come across issues or want to solve more complicated puzzles, your productivity drops as you struggle with the design choices of others.

I think one way in which these frameworks can be assessed is by asking how much it will cost you to remove them. We're currently using Symfony, a PHP MVC framework, and it follows this pattern exactly. It's easy to get started, and doing easy things is easy, but when we want to stray off the beaten path, we get beaten ourselves. The documentation is sparse, and if we decided to use something other than Symfony, we might as well rewrite our project.

Now, I'm not trying to start a Symfony-bashing dialogue; I'm just using it as an example. In fact, Milan makes the point that this particular framework is open source, and if we have problems, we should contribute something back to the project, which is the right way to view this.

It still doesn't stop me from having second thoughts about using a framework again. Give me swappable libraries every time.
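
To make "swappable libraries" a little more concrete, here's a minimal sketch in Python (not the PHP we actually use, and not code from our project). The `Codec` interface, the two adapter classes and the `round_trip` function are all hypothetical names made up for illustration. The idea is that the application depends on a small interface it owns, so the cost of removing a library is roughly one adapter rather than a rewrite.

```python
import json
import pickle
from typing import Any, Protocol


class Codec(Protocol):
    """The small interface the application owns and depends on (hypothetical)."""

    def encode(self, value: Any) -> bytes: ...

    def decode(self, data: bytes) -> Any: ...


class JsonCodec:
    """Adapter over the standard json module."""

    def encode(self, value: Any) -> bytes:
        return json.dumps(value).encode("utf-8")

    def decode(self, data: bytes) -> Any:
        return json.loads(data.decode("utf-8"))


class PickleCodec:
    """Alternative adapter over the standard pickle module."""

    def encode(self, value: Any) -> bytes:
        return pickle.dumps(value)

    def decode(self, data: bytes) -> Any:
        return pickle.loads(data)


def round_trip(codec: Codec, settings: dict) -> dict:
    """Application code: it knows only the Codec interface, not the library."""
    return codec.decode(codec.encode(settings))
```

Swapping `JsonCodec()` for `PickleCodec()` at the call site is the only change needed, which is the kind of removal cost I'd like from any dependency.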

Thursday, November 13, 2008

How Sainsbury's are Coming into the 21st Century

I was in my local Sainsbury's the other day, doing my shopping (what else?). As I was at the checkout I noticed a few things.

First, you've probably noticed gift cards for iTunes, or something similar, at the checkout. What I noticed this time, however, was what I want to call a "student food card". This is a two-part card: one part for topping up the account, one for using credit from the account. It is plainly aimed at the concerned parent who wants to make sure their kids are eating properly whilst at university, and who doesn't want to put money into their kids' accounts only for it to be spent on nights out and Pot Noodles for dinner (though the flaw here is that Sainsbury's sell booze too, but we'll brush over that). I did think, though, that this could be used the other way round too. What about someone with an ageing parent or perhaps a lowly paid sibling? Wouldn't you want to make sure they were eating properly too?

So, I thought that was cool, but there was a little more to come.

Second, there were no bags at the checkout. I told the cashier, who told me they'd stopped putting bags out. She pointed me to a sign saying as much, which I'd ignored as an 'out of bags' sign. In the name of environmental awareness, Sainsbury's are trying to get their customers to reuse their bags, so you now have to ask for carrier bags. They've given store points away for ages for reusing bags, but that obviously isn't having the desired effect.

One of the things they're doing to help their customers is providing a free SMS reminder service. You send a message saying when you usually go shopping and it sends you a reminder a few hours before, saying not to forget your bags.

This could be a tentative first step, but I can see much more here. They're only providing this until the end of the year, but how about linking this to my store points card and, instead of charging me for the SMS, removing some of those points? Also, by linking your mobile to your store card, just think of the data mining possibilities. You wouldn't have to tell them when you go shopping; they have that data. Also, what better direct marketing would you like? Sainsbury's have about ten years' data on me. They have a pretty good idea what I buy and when, and could send me special offers just for me, with some kind of code that a cashier could enter.

We'll see what happens. It's an odd combination of convenience and scariness.

Tuesday, October 28, 2008

Agile Software Development and Black Swans

While reading the excellent book The Black Swan, by Nassim Nicholas Taleb, I was trying to think of scenarios and examples that I've seen that I could apply to what Taleb was talking about. Although I don't really follow economics, I did find myself thinking about agile software development.

I don't want to go into what Black Swans are, other than to say they represent highly improbable events. If you want to know more, pick up a copy of the book. Essentially, Taleb says that when a Black Swan occurs the effects can be disastrous, because such events are often ignored when calculating risks. Of course, many Black Swans are unknown unknowns, so how can you measure something like that? Well, you can't, but as the saying goes, "it's better to be broadly right than precisely wrong".

So, how does this apply to agile? Well, the way I've practised it, it's about drilling out uncertainty by planning a prioritised work stack for a limited scope (generally 3 months). This minimises risk by only addressing immediate and near-term issues, allowing a project to be flexible (think broadly right rather than precisely wrong). The daily stand-ups, acceptance demos and retrospectives are all forums for identifying risk early, in the hope of lowering the overall risk to a project's delivery. Finally, and above all, agile and its practices of test-driven design, continuous integration and burn-down charts provide empirical evidence of delivery, rather than anecdotal.

In today's software world, where it's acceptable to deliver functional, if not complete, applications into service, why wouldn't you want to work with a limited prioritised stack with empirical evidence to demonstrate readiness?

Tuesday, September 16, 2008

Re-Developing an Application using TDD against an Established API

The last few months have provided an interesting experiment to test our application testing. For several reasons that I won't go into, we decided to rebuild one of our web services, and we made the decision to try to keep as many of the tests we had as possible. We found out quite quickly that moving unit tests to a new project when you're redesigning a system just isn't feasible. Our unit tests were too tightly tied to the design (well, that's the point of test-driven design, right?). However, our acceptance tests, which test our API as a black box, were perfect candidates for measuring our progress.

It turned out to be more useful than we'd realised. When you have working black box tests, you can do whatever you want to the internals of the application, as you already have appropriate tests to validate the application. This is exactly what we did.

We could happily start turning on our acceptance tests as and when we felt a feature was implemented. Insisting that we should not change these tests gave us an excellent benchmark for completeness. Of course, this is quite a rare occurrence, but it goes to show that if you test at different layers of your application and regard APIs or interfaces as unchangeable, you can re-implement to your heart's content.
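
As a rough illustration of the idea (this is not our actual test suite), here's a minimal Python sketch. The `LegacyService` and `RebuiltService` classes and their `create_user`/`get_user` calls are hypothetical stand-ins for the old and new implementations. The point is that the same black box checks, written only against the public API, run unchanged against both.

```python
import unittest


class LegacyService:
    """Hypothetical original implementation: stores users in a dict."""

    def __init__(self):
        self._users = {}

    def create_user(self, name):
        user_id = len(self._users) + 1
        self._users[user_id] = name
        return user_id

    def get_user(self, user_id):
        return self._users.get(user_id)


class RebuiltService:
    """Hypothetical rewrite: different internals (a list), same public API."""

    def __init__(self):
        self._rows = []

    def create_user(self, name):
        self._rows.append(name)
        return len(self._rows)

    def get_user(self, user_id):
        if 1 <= user_id <= len(self._rows):
            return self._rows[user_id - 1]
        return None


class AcceptanceTests:
    """Black box checks; they never touch the internals of the service."""

    service_class = None  # set by each concrete test case below

    def setUp(self):
        self.service = self.service_class()

    def test_created_user_can_be_fetched(self):
        user_id = self.service.create_user("alice")
        self.assertEqual(self.service.get_user(user_id), "alice")

    def test_unknown_user_returns_none(self):
        self.assertIsNone(self.service.get_user(999))


class LegacyAcceptance(AcceptanceTests, unittest.TestCase):
    service_class = LegacyService


class RebuiltAcceptance(AcceptanceTests, unittest.TestCase):
    service_class = RebuiltService
```

Saved as a module, this can be run with `python -m unittest`. A unit test that poked at `_users` or `_rows` would not survive the rewrite, which mirrors what we saw with our own unit tests.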

Wednesday, September 10, 2008

Convergence, Divergence, Convenience and Quality

When I got my first job, I was really keen to get a great stereo. At the time the thinking was that to get quality music playback, hi-fi separates were the way forward. Each component was standalone, loosely joined by standard interconnects (phono, coaxial or fibre optics), and was easily replaced, upgraded or repaired without affecting the rest of the product. This was as opposed to a hi-fi where, when, say, the CD player breaks, you need to replace the whole damn thing.

Now the trend is convergence. As Dr. Pep mentions, I have been tempting him, and myself, into buying an iPhone. The obvious comparison is that the iPhone is convergence personified. It's a mobile phone, a 3G device, it's wifi enabled, an MP3 player, a GPS receiver and a pseudo computer that fits in your pocket.

What happens if one of those features breaks, or you need to change the battery? Well, it seems you're in trouble. But that's the price of convenience. The alternative is a mobile phone (with or without 3G access), a laptop with wifi and a 3G dongle, an MP3 player and a GPS receiver. You can of course get devices other than the iPhone that do many of these things, but let's face it, they're not an iPhone.

So here's the dilemma: I want to upgrade and get new features like GPS and 3G access, but I already have an iPod, a mobile phone and a wifi-enabled laptop. If I were to buy the iPhone, do I throw this all away? If so, it becomes an expensive upgrade.

Does it follow that more convergence means fewer devices, means more time on a single device, means wearing that device out sooner, means expensive upgrades more often? How does having one be-all and end-all device affect my battery life? I don't want to have to plug something in every four hours.

Wednesday, August 13, 2008

Pushing the Boundaries of Testing and Continuous Integration

At Agile2008, I had the opportunity to present an experience report with two colleagues, Raghav and Fabrizio, about bringing robustness and performance testing into your development cycle and continuous build. Below is the video, along with the slides. Enjoy.

The submission can also be read here.

Pushing the Boundaries of Testing and Continuous Integration from Robbie Clutton on Vimeo

Sunday, August 03, 2008

Enterprise Agile vs Start Up Agile

Discussions in the office typically bring interesting conversation, and as I head to Agile2008 (I'm sitting on the flight whilst writing this), I wanted to ask what the difference is between enterprise agile and start-up agile, and how that difference affects not just the maintainability of the products built, but also the innovation of the respective engineering groups.

There is always the classic trade off between feature delivery and quality, but is it really the case that startups are generally exempt from these quality metrics? If this is the case, what other practices can be put in place instead of code metrics?

One of my most memorable quotes from high school came from my physics teacher, Mr Shave. He said to the class, 'you can never prove anything, you can only disprove something'. This applies here with quality metrics. Code coverage, convention and duplication checks don't prove quality in code. Even integration testing or robustness and performance testing do not prove anything; they only show that your system doesn't fail under the specific conditions tested. However, all of these steps do increase confidence in a product, and that confidence is an integral part of any product development.

You can still not do any of this and be agile, right? These practices are generally encouraged, but agile is about customer engagement and acceptance, and if your customer knows what they're getting and they accept your stories then it's OK, right? What is enterprise agile anyway? Do enterprise developers build a product, deliver it into an in-life team and then move on? Does that require higher confidence as you're handing over? Is a start-up developer likely to support that product in-life and as such be more likely to stick around, fix issues and add functionality?

GMail Log4J Appender

Application logging is vital for diagnostics on a server product, but there can be so much of it; how can you tell what to watch or follow? Through tools like Log4J, you can have separate logs for different levels (typically debug, info, error and fatal). In Log4J, these are called log appenders; they can be console or file based, or custom. One of the custom appenders I've been playing with recently is email logging. Our team has some excellent scripts written by Senor DB which scan log files for patterns and send email reports based on their findings. I wanted to see if I could add this kind of reporting directly into the application. I wanted an email based appender and here's how I got that.

Now, there is an SMTPAppender in the Log4J package, but I wanted to use GMail, and the Log4J appender doesn't quite set up properly for GMail. I used Spring's implementation of JavaMail for sending emails and extended the SMTPAppender in the Log4J package. The method you'll be most interested in overriding is 'append'; this gets called when an event is logged, depending on the settings in your log4j configuration.

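public GmailAppender() {
    super();

    // Spring's JavaMailSenderImpl does the actual sending
    notifier = new JavaMailSenderImpl();
    notifier.setHost("smtp.gmail.com");
    notifier.setPort(587);
    notifier.setUsername(yourGmailEmailAddress);
    notifier.setPassword(yourGmailEmailPassword);

    // GMail needs authentication and STARTTLS switched on
    Properties props = new Properties();
    props.setProperty("mail.smtp.auth", "true");
    props.setProperty("mail.smtp.starttls.enable", "true");
    notifier.setJavaMailProperties(props);

    // Default to the machine name; the log4j config can override it
    try {
        InetAddress addr = InetAddress.getLocalHost();
        hostname = addr.getHostName();
    } catch (UnknownHostException e) {
        // Fall back to a placeholder rather than a null host name in the subject
        hostname = "unknown";
    }
}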

For brevity, I've used the constructor to build the notifier (JavaMailSenderImpl from Spring) with the desired properties. You can just as easily use properties in the log4j config, and I'll do just that for the host name (which for me means which IP address the application is running on). You can see above we've set the SMTP host for GMail with the port, plus the email address and password of the account the email is sent from. The little extra bit of magic is the properties 'mail.smtp.auth' and 'mail.smtp.starttls.enable'. The last part gets the default machine name.

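@Override
public void append(LoggingEvent event) {
    super.append(event);

    SimpleMailMessage message = new SimpleMailMessage();

    // The layout from the log4j config formats the heading of the message
    StringBuilder builder = new StringBuilder();
    builder.append(getLayout().format(event));

    // Each array element is one line of the stack trace. There is no
    // throwable information when the event was logged without an exception.
    if (event.getThrowableInformation() != null) {
        String[] stackTrace = event.getThrowableInformation().getThrowableStrRep();
        for (String line : stackTrace) {
            builder.append(line).append("\n");
        }
    }

    message.setText(builder.toString());
    message.setTo(emailAddress);
    message.setSubject(event.getLevel().toString() + " on host " + hostname);

    try {
        notifier.send(message);
    } catch (MailException ex) {
        // Report through log4j's internal LogLog (org.apache.log4j.helpers),
        // so a mail failure cannot trigger another email
        LogLog.error("Could not send log email", ex);
    }
}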

Using the SimpleMailMessage (again from Spring), we add the text, the address and the subject. The parts to grasp here are the layout and the stack trace. The layout is derived from your configuration; this is where you'd typically set the log message/heading including timestamp, thread name and other supporting information. Passing the event to the 'getLayout().format(event)' method returns this nicely formatted string. Then, to get the stack trace, we grab the array (each element being a line in the stack trace) from the event's throwable information. Someone thought that a method whose name suggests you're getting a string should pass back that array rather than a single string with new line characters, so we have to fix that before setting the text as the message body. Finally, we construct a useful subject containing the log level and host/IP address.
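The line-joining step can be pulled out and tried on its own. This is only a minimal sketch: StackTraceText is a hypothetical helper name of mine, not a Log4J class, and it assumes (as I understand Log4J 1.2) that there is no stack trace array at all when the event was logged without an exception.

```java
// Hypothetical helper for illustration; not part of Log4J or Spring.
final class StackTraceText {
    private StackTraceText() {}

    // Joins the per-line stack trace array into one newline-terminated block.
    // 'lines' is assumed to be null when the event carried no throwable.
    static String join(String[] lines) {
        if (lines == null) {
            return "";
        }
        StringBuilder builder = new StringBuilder();
        for (String line : lines) {
            builder.append(line).append('\n');
        }
        return builder.toString();
    }
}
```

Keeping the null check in one place means the appender never falls over on a plain error message that has no exception attached.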

log4j.appender.mail=com.iclutton.GmailAppender
log4j.appender.mail.Threshold=ERROR
log4j.appender.mail.layout=org.apache.log4j.PatternLayout
log4j.appender.mail.layout.ConversionPattern=%d{ISO8601} [%t] %-5p [%C{1}.%M() %L] - %m%n
log4j.appender.mail.hostname=important-live-server

I've mentioned the configuration a few times, and the basic one above comprises the full path to the class and the threshold (meaning the lowest level to log at; I don't want an email for every debug log entry after all). There's the pattern I mentioned before, which includes the date, thread name, level name, class, method and line number, then the message and a new line. Finally there's the host name. Each instance of my application has a different name to identify it, typically something like development, staging or production. To enable that property in the class, simply add a Spring-like named setter:

public void setHostname(String hostname) {
    this.hostname = hostname;
}

Properties like this can be added if you want the username/password of your GMail account, or anything else for that matter, to be configurable. Just don't forget to set them on the JavaMailSenderImpl object before sending the email in the append method.

Monday, July 14, 2008

Some Guiding Principles for Supporting Production Apps

I just finished a two week rotation supporting our production web services and wanted to jot down some thoughts. This coincided with being sent a link to a Scott Hanselman blog post about guiding principles of software development, which I really enjoyed reading. I thought I'd extend a few of those now that I've come out of my support rotation an enlightened developer (I hope).

Although Scott's blog is for Windows/.NET development, there are lots of goodies in there which can be applied or ported to any language. I'd like to narrow in on the support based principles: tracing (logging) and error handling.

Scott mentions that for critical/error/warn logging, your audience is not a developer. Well, in this case I am a developer; however, I may not know the application, so I need as much information as I can get. This leads me onto my first extension: transaction IDs.

Now, there are really two kinds of transaction ID, especially when dealing with a service based application. First, every invocation of your service should be given a unique ID, and that ID should be passed around the code (and logged against) until the path returns to the client. Depending on your application, this could get really noisy, but a multi-threaded application could benefit from such information. Second is a resource/task based ID: if a service does something (let's call it A), but the execution path returns to the client before A is complete, the ID for A should be returned to the client, and this ID should live through the execution of A and be stored or archived accordingly. In terms of logging, whenever you come across this ID, use it. This generally follows for all IDs: if you have an ID, use it when logging.
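To make the first kind concrete, here is a rough sketch of a per-invocation ID that gets stamped onto every log message. The TransactionContext class and its method names are made up for illustration, and it assumes each invocation is handled on a single thread; work handed to another thread would need the ID passed along explicitly.

```java
import java.util.UUID;

// Hypothetical holder for the ID of the invocation running on this thread.
final class TransactionContext {
    private static final ThreadLocal<String> CURRENT = new ThreadLocal<>();

    private TransactionContext() {}

    // Call at the service entry point; returns the new ID so it can also
    // be handed back to the client.
    static String begin() {
        String id = UUID.randomUUID().toString();
        CURRENT.set(id);
        return id;
    }

    // Prefixes a log message with the current ID, if there is one.
    static String tag(String message) {
        String id = CURRENT.get();
        return id == null ? message : "[txn " + id + "] " + message;
    }

    // Call when the path returns to the client, so pooled threads
    // don't carry a stale ID into the next invocation.
    static void end() {
        CURRENT.remove();
    }
}
```

Anything logged between begin() and end() can then be pulled out of a noisy log by searching for the one ID.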

With error handling, it's accepted that catching a generic exception is bad, although permissible at the boundary of your application. If you must catch 'Exception' or 'RuntimeException', log it, and log it at error level at least. It's a generic exception for a reason, and that reason is you don't know what happened.
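As a sketch of what that boundary catch might look like, here is one using java.util.logging (chosen only so the example needs nothing outside the JDK). ServiceBoundary and the fallback argument are my own illustrative names, not anything from Scott's post.

```java
import java.util.concurrent.Callable;
import java.util.logging.Level;
import java.util.logging.Logger;

// Hypothetical wrapper for the outermost edge of a service call.
final class ServiceBoundary {
    private static final Logger LOG = Logger.getLogger(ServiceBoundary.class.getName());

    private ServiceBoundary() {}

    // Runs the work and returns its result. If anything unexpected escapes,
    // it is logged at the highest level with the full stack trace, and the
    // fallback is returned to the caller instead.
    static <T> T call(Callable<T> work, T fallback) {
        try {
            return work.call();
        } catch (Exception e) {
            // Generic catch: we don't know what happened, so treat it as an error
            LOG.log(Level.SEVERE, "Unexpected failure at service boundary", e);
            return fallback;
        }
    }
}
```

Everything inside the boundary should still catch specific exceptions; this is only the last line of defence.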

AOP is a great technique for logging; don't be frightened to use it everywhere (and this goes for all logging).

Moving onto a topic touched upon, but not thoroughly explored: configuration. If building a server product, config is your friend. When things go bad and you need to re-wire your application, not having to do a code change is a massive benefit. If the config is dynamically read, that's even better as it won't require a restart of the server.
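One way to get dynamically read config, sketched with nothing but the JDK: re-read a properties file whenever its last-modified time changes. ReloadingConfig is a hypothetical class of mine, and checking the timestamp on every lookup is an assumption that suits small files and modest traffic rather than a recommendation for every product.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.FileTime;
import java.util.Properties;

// Hypothetical config holder that picks up file changes without a restart.
final class ReloadingConfig {
    private final Path file;
    private Properties cached = new Properties();
    private FileTime loadedAt; // null until the first load

    ReloadingConfig(Path file) {
        this.file = file;
    }

    // Returns the value for the key, reloading the file first if it has
    // been modified since the last read.
    synchronized String get(String key, String defaultValue) {
        try {
            FileTime modified = Files.getLastModifiedTime(file);
            if (!modified.equals(loadedAt)) {
                Properties fresh = new Properties();
                try (InputStream in = Files.newInputStream(file)) {
                    fresh.load(in);
                }
                cached = fresh;
                loadedAt = modified;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return cached.getProperty(key, defaultValue);
    }
}
```

With something like this, re-wiring the application when things go bad is an edit to a file rather than a code change and a redeploy.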

Lastly, think about your archive mechanism and think about what data you'll need to preserve and for how long. Ideally, from that data, execution paths should be interpretable, at least at a high level.

Oh, and please be kind to your support team, make it easy for them to gather information about your application. If an application is one of many, consider implementing a common health/management (and provisioning if you can) interface.

I think putting together a list of principles like this is a great idea, each engineering group should think about the ways they work and how to drive commonality across products. Teams shouldn't be scared of thinking about support throughout the development process either, although they've been hearing the same thing about security for years ;)

Labels: AOP, development, logging, scott hansleman, support

Tuesday, July 08, 2008

Scott Hanselman podcast with the Poppendiecks

A great podcast which deserves its own post. Mary Poppendieck really came out with some cracking soundbites, including:

* product teams should be driven by profit and loss, not by on-time, on-budget, in-scope, customer satisfaction and quality.

* engineering and business - it's not them and us, it should be all of us

* no excuse for IT people to be separate (organisational) from the business if IT

* If IT is routine (email etc), outsourcing is fine; how innovative can your email be? If building core competencies, outsourcing is generally a bad idea

* leverage workers' intelligence to deliver; management should be leaders, not micro managers

Loads of good stuff, check it out!

Labels: Agile, delivery, hansleminutes, poppendieck, scott hansleman, Software

Monday, July 07, 2008

A Day at OpenTech

I spent my Saturday at the OpenTech conference in London and it was a day well spent. I do have to learn to go and listen to new things though: although talks from Tom Morris (who I later met for the first time), Simon Willison and Gavin Bell were interesting, those were subjects I already knew about or was aware of to a fair degree. What did strike me, however, were some of the last sessions, by Tom Loosemore and Chris Jackson.

Between them they told stories which included building a peer-to-peer TV network for persisted content, and a project to become 'the DNS for media'. I've always had an interest in home entertainment, and these talks made my eyes light up. I'm really pleased to see that Tom has posted his presentation.

Tom's 'impossibox' was capable of storing 1TB of media data, roughly a month's worth. If that were to be networked in a P2P fashion, you could have months of recorded TV, redundantly stored in every house on your street. I think this plays into the networks' hands as well. This isn't real time data and can be sync'd, or you can download a program or series at the networks' leisure without breaking net neutrality. Throw in that, if there were 100 such networked boxes within a mile's radius, chances are you could take care of the P2P sharing at the local exchange, so you wouldn't even need to push large amounts of data all over the place.

Although this future is a 'slightly bonkers' version, it sparks the imagination. Just think of the social networking benefits of being able to capture this data: see friends' recommendations, read comments, video mashups, trailers, directors' (or other users') commentaries. So much potential.

Great talk.

Labels: chris jackson, gavin bell, impossibox, opentec, P2P, simon willison, social networks, tom loosemore, tom morris, TV

Wednesday, July 02, 2008

Data, Data all around

I attended 2gether08 this afternoon and walked into a very interesting discussion on making data public. Having followed the Free Our Data campaign from the Guardian, I've had a keen interest in the subject. Many points were discussed: innovating on this newly available data, the cost savings of doing so, privacy issues surrounding private data (such as medical records), data which people seem to give away freely through social sites such as Facebook, and finally data which people give away to get a better experience (think supermarket club card, or Google search).

One of the interesting points raised was about who the public tend to put their trust in and, maybe not so surprisingly, it's not the government. There is a trend of people putting more data into corporate entities, be they your favoured supermarket, accountant or bank. An interesting point was raised by Paul Downey: although he does online banking, he doesn't have the same trust in Yahoo! to mash up his banking data through Pipes or some other such technology, mainly because banks are regulated authorities.

The conversation moved on and touched upon escrow-like accounts for personal data, and this got me thinking about identity. As we move towards tools like OpenID and OAuth, the thought occurred to me: would you log into your bank with your OpenID? If your OpenID provider isn't regulated, or even in the same country as you, would you trust it enough with potential access to your online bank? Let me turn that question around a bit now: if your OpenID provider was a regulated authority, perhaps the bank in question or even some government department, would that change your answer?

The final question left in my mind was: with a few people thinking about decentralised escrow-like data accounts, could you trust people to look after their own data? I know that when I carry my passport with me, I get slightly paranoid. I also know that after losing 2 or 3 hard discs over the last 10 years, I'm not sure I'd want that responsibility. What I do know is that I hate repeating myself, and if government bodies can share data more easily between themselves, and if there's a way I can authorise companies to share my data in a way that makes my life easier to lead, I might just buy into that.

I suppose with the level of data that may become available, the responsibility may shift somewhat with who owns, maintains and secures that data.

Labels: data, free our data, openid, paul downey, privacy, psd, yahoo

Sunday, June 29, 2008

Confusion and Patience

I find it interesting that, as a developer, I tend to have very low patience for poor user experience. I sometimes feel stupid if I fail to use a piece of technology, though these are often kiosk based applications like checking in at an airport.

Then conversely, it seems that some people go out of the way to make something more complicated. Earlier today I renewed my Arsenal membership. There are several levels of membership and several variations of each.

* Junior

* Red

* Silver

* Gold

* Platinum

I just want the cheap option, which is priority booking for match tickets, but now there are four payment options.

* Lite

* Lite DD

* Full

* Full DD

I couldn't find the difference between Lite and Full, and the DD stands for Direct Debit, which is a payment option, surely not a membership option.

I just can't stand stuff like this, why do people go out of their way to make it harder for me to go about my day? Too many options are bad, make it clear what I'm getting. As for the crappy kiosk applications, I'm impatient and as such am likely to make mistakes, be forgiving and make it transparent what's going on.

I have to say, I think the London Underground have got it right with their ticket machines (though this may be as I'm used to travelling on the system - I actually found San Francisco's train network fairly confusing even though the premise is very simple). They have big colourful buttons on a touch screen, where I can easily search for the ticket I need for my journey.

Wednesday, June 11, 2008

Bear vs Shark strikes again - TV Billboards

Driving to San Francisco today, I saw a disturbing sight: a TV billboard. This was not a revolving billboard with three rotating adverts; this was a twenty-odd foot TV display on a major motorway.

I've always found billboards a distraction when driving, so I wonder how TV based versions will go down. I can just imagine these being indiscriminately targeted at their audience; coffee shops for morning commute, alcohol and restaurants for evening commutes, toys and games for the school runs and the list goes on.

It's just another step.

Saturday, May 24, 2008

How .Net is becoming more like Java every day

I've noticed that the .Net community appears to be becoming more like Java's every day, and it's nothing to do with the language. I haven't built any commercial .Net applications in over a year, but I have been using Java a lot over the last 6 months or so. While I don't rate Java as a language above or below any others (they all have plus/minus points after all), the most powerful thing about Java, in my opinion, is the community.

The number of tools around the language is amazing, with arguably the best product being the Spring Framework. What I've noticed in the .Net community is a movement they're calling Alt.Net, which is embracing broad terms like agile and open source. The way I see it is that, whatever you think of the .Net Framework, it'll never do everything that everyone wants, just like the Java runtime never will. These are people who have ideas and are moving towards community based development and away from reliance on Microsoft.

This moves the discussion on somewhat. With all the new languages available on top of Java and .Net, the next arguments won't be Java vs .Net, they'll be JRE (Java Runtime Environment) vs CLR/DLR (Common/Dynamic Language Runtime). Both have a Ruby implementation, JRuby on the JRE and IronRuby on the DLR, among a whole bunch of others. The only thing holding .Net back is Linux deployment, but the Mono Project is trying to solve that problem, and as it becomes more mature, it'll be interesting to see which runtime people deploy on. Will the number of runtimes get smaller while the choice of languages on each grows? I'm not sure, but I'll be watching.

Thursday, May 08, 2008

Latest Scores Makes The Guardian

I was randomly following up on one of my new followers for a latest score tweeter when I found this link to the Guardian: http://www.guardian.co.uk/technology/2008/may/08/socialnetworking.twitter

Towards the bottom under miscellaneous tools is a link off to http://www.code.google.com/p/latestscorestwitter

AWESOME!

Wednesday, April 30, 2008

Open Sourcing Twitter Apps

I know it's only a tiny app, but I've had people ask me how I created the Twitter apps that post the latest football scores (e.g. http://twitter.com/arsenal_scores), so having a bit of extra time (no pun intended) this week I decided to grab the latest copy and put it on Google Code. It serves another purpose, as I hadn't backed it up; now it's in source control and I can point interested people to it.

Tuck in - all 3kb of it.

http://code.google.com/p/latestscorestwitter/

Tuesday, April 29, 2008

Database Migrations using Rake without Rails

Database migrations can be a pain. They are for me anyway, when I have to fire up an SQL window and execute commands (I'm lazy). Having used Ruby on Rails for a previous project, I came across the command 'rake db:migrate' and loved how creating tables and columns, and altering them, was reduced to Ruby code, which is much easier to read and execute. I wanted to find a way to use that command on a project that wasn't using Rails. I found an excellent post on the subject, but I tweaked it slightly.
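Stripped of Rails, the idea behind 'rake db:migrate' is just an ordered list of schema changes plus a record of which ones have already been applied. Here's a rough sketch of that idea, written in Python with sqlite3 rather than the Rake and ActiveRecord setup from the post I followed. The table names, the `MIGRATIONS` list and the `migrate` function are all made up for illustration.

```python
import sqlite3

# Hypothetical migrations: (version, SQL) pairs, applied in ascending order.
MIGRATIONS = [
    (1, "CREATE TABLE teams (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE teams ADD COLUMN league TEXT"),
]


def current_version(conn):
    """Return the highest applied migration version, or 0 if none."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_migrations (version INTEGER PRIMARY KEY)"
    )
    row = conn.execute("SELECT MAX(version) FROM schema_migrations").fetchone()
    return row[0] or 0


def migrate(conn, migrations=MIGRATIONS):
    """Apply every migration newer than the recorded version; return the versions applied."""
    applied = []
    start = current_version(conn)
    for version, sql in sorted(migrations):
        if version <= start:
            continue  # already applied on an earlier run
        conn.execute(sql)
        conn.execute("INSERT INTO schema_migrations (version) VALUES (?)", (version,))
        conn.commit()
        applied.append(version)
    return applied
```

Calling `migrate(sqlite3.connect("scores.db"))` (the file name is just an example) twice in a row should apply everything the first time and nothing the second, which is the behaviour I liked about the Rails command.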

Monday, April 28, 2008

Open Source Call Control and Text to Speech Makes CallFlow Release

It's an exciting time at work: we've 'officially' released Aloha, our Session Initiation Protocol (SIP) Application Server, as an open source project. We also released text to speech as a feature of CallFlow into our sandbox environment. Why do I think this is exciting? Well, several months ago I blogged about 'is it good enough to consume open source without contributing?', and I think this shows where we stand on that discussion.

Now, SIP servers may not mean a lot to many people, but a SIP server really should be a commodity piece of software, and this goes back to a blog I mentioned in the link above from JP, Build vs Buy vs Open Source. I think it's great to share, so here is our effort. I don't know if it will get any uptake, but it'll be fun finding out.

In other news, there were some cool new features added to CallFlow during the Web21C release today. It's been pretty fun working on such a novel voice application, with text to speech the obvious highlight. In fact, I wrote about it on my work blog.

Finally, I've been putting together a paper based on experiences developing Aloha with Rags and Fab. If you're interested, you can read the submission.

Monday, April 07, 2008

It's a Mac Mini Adventure

It certainly has been a mini adventure, but one that spans several years in a quest for home entertainment. I think I may even be finally happy with that. With my new home I've been able to do some things I've always wanted to do. Since I left school I started to build my collection of stereos, TVs and other goodies. Recently I added two pairs of Mordaunt Short Genies with a matching centre to add to my 32" Samsung LCD TV.

My dilemma was that I'd moved my PC away from the TV. I had been using it to watch videos through the VGA port on my TV and to play MP3s through my amp.

I had tried streaming to my Xbox 360 using the Media Centre Extender, and although this works quite nicely for audio, it can't cope with DivX movies even though the Xbox has been given DivX support. There were a few products on the market which might have done the job, but then I thought "what is the most configurable, most likely to work how I want it to work solution?" The answer: a computer.

It needed to be small and able to handle videos and music, which all computers can. It also needed to support DivX and other formats and ideally have a Media Centre-like application with a remote. The Mac Mini was my answer to that question.

Having a computer as a media centre is both a great thing and a bad thing. It's great as it's flexible but it's bad because it needs input through keyboard and mouse. There may be a way to disable this from the Mac I'm unaware of, but I added a wireless keyboard and mouse that works really nicely. The mouse even works on the material of my sofa.

See, for the smallness of the remote, the small keyboard and mouse are more powerful and easily stashed under the sofa.

All in all, I'm very happy with the purchase, a small media centre hooked up to my TV and amp for £500 that can also be used as a full on computer from the sofa. Throw in a 750GB external hard disc and I'm laughing.

Thursday, April 03, 2008

URI as Contract

When working on Mojo, one of the first things we did was to come to an agreement on the API that the application would use. I think we went in this direction early as we had always intended for Mojo to be used as a 'widget' platform to access the Web21C SDK Services. I think it was a great move, and I'd certainly recommend this, even if you don't intend your web app to have an API.

With modern REST-based web apps, we tend to think about the URI a lot more, along with what to POST or GET to that URI. There are no hard rules, and as such no toolkits, to help the developer build REST-friendly URIs; it's more of a mindset. I won't repeat the guidelines here, but it essentially boils down to cool URIs and being mindful of resources.

The part where you can come unstuck is thinking in terms of actions or methods. It's something we all have to get used to, but I truly believe that if you invest a little time to agree the URI as contract, it'll save headaches later on.
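To make 'URI as contract' a bit more concrete, here's a minimal sketch of what writing the contract down might look like. This is not Mojo's actual API: the `/messages` resource, the `CONTRACT` table and the `resolve` function are all hypothetical. The point is only that the URIs name resources (nouns) and the HTTP method carries the action.

```python
import re

# Hypothetical contract: (HTTP method, URI template, what it means).
# Templates are assumed to contain only plain path text and {placeholders}.
CONTRACT = [
    ("GET", "/messages", "list messages"),
    ("POST", "/messages", "create a message"),
    ("GET", "/messages/{id}", "fetch one message"),
    ("DELETE", "/messages/{id}", "remove one message"),
]


def template_to_regex(template):
    """Turn '/messages/{id}' into a compiled regex with a named group per placeholder."""
    pattern = re.sub(r"\{(\w+)\}", r"(?P<\1>[^/]+)", template)
    return re.compile("^" + pattern + "$")


def resolve(method, path, contract=CONTRACT):
    """Return (meaning, params) for the first matching contract entry, or None."""
    for verb, template, meaning in contract:
        match = template_to_regex(template).match(path)
        if verb == method and match:
            return meaning, match.groupdict()
    return None
```

With a table like this agreed up front, a request such as `resolve("GET", "/messages/42/delete")` simply has no entry, which is a handy nudge away from action-style URIs.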

Tuesday, April 01, 2008

Enterprise computing for non-geeks

Hello, and welcome to your new laptop from your employer, Enterprise X. We know that your old laptop didn't need replacing, but this way we'll be able to ensure that you're up to date with all of our corporate policies and the applications that support them.

Firstly, you'll notice that although you have a 'mobile' computer, if you do take it out of the office you'll have to use your secure token to VPN (or dial-in) to use the internet. It's a big bad world out there, and we like to look after you.

You'll also notice that we've kindly given you 'admin' access, although you have to request this through our control panel and explain why you need it. Although your new laptop is capable of using the latest 'mainstream' applications, we've decided to give you the old versions, after all, that's what you're familiar with.

We've also done some great work to enable automated synchronisation of your 'My Documents' folder to the tune of two whole gigabytes. How you'll use that, we'll never know! We also have this great way of saving email space: instead of giving you standardised email access through POP or IMAP, we've used a series of vendor products to safeguard your email by only showing you the subject and a sentence or two until you really need it. Neat huh? We've recently increased the email storage size to 40MB to take advantage of this space saving. Who really needs 6GB of indexed and searchable web mail anyway?

Finally, we've added the ultra secure 802.1X network authentication to your offices, but don't worry, as you're using approved hardware this will all be taken care of...

*stops*

*installs ubuntu*

Friday, March 21, 2008

FOAF me up!

After reading this blog about adding Friend of a Friend (FOAF) to a web page, I did this, but I wasn't happy with the result. I then found the FOAF project page and used the FOAF-a-matic to create an RDF that's now embedded in this blog. Yes, I'm supposed to publish a file called foaf.rdf, but you can't have it all.

If you have FOAF, let me know - and I might add you as a friend ;)

- edited back to the original - thanks Tom

Test and Test Again

There has been some discussion of the application of agile principles including test driven development in the office recently. Having been working on a highly concurrent, scalable SIP application server my feet are firmly in the test first, test often camp.

Our testing strategy includes:

* unit testing, usually using mocks and dependency injection

* acceptance testing, using FitNesse

* robustness testing, using custom scenario handlers

* performance testing

These practices are dependent on the type of project, but we've found that although unit testing is done before the functional code is written, the other tests are usually written afterwards. I like to think that acceptance tests demonstrate completed user stories and robustness tests exercise common scenarios, concurrently.

A robust application comes with time, but through concurrent testing, issues can be drawn out earlier and the confidence you have in your application grows.
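For the first item in that list, here's a small sketch of what I mean by unit testing with mocks and dependency injection. It isn't taken from our code base: `CallController`, `send_invite` and the SIP addresses are invented for illustration, and I've used Python's `unittest` and `unittest.mock` to keep it short. The transport is passed in through the constructor, so the test can hand over a mock and check the interaction without touching a network.

```python
import unittest
from unittest.mock import Mock


class CallController:
    """Hypothetical class under test; the transport is injected, not created inside."""

    def __init__(self, transport):
        self.transport = transport

    def connect(self, caller, callee):
        # Refuse obviously bad input before touching the transport.
        if caller == callee:
            raise ValueError("caller and callee must differ")
        self.transport.send_invite(caller, callee)
        return "ringing"


class CallControllerTest(unittest.TestCase):
    def test_connect_sends_one_invite(self):
        transport = Mock()
        controller = CallController(transport)
        state = controller.connect("sip:a@example.com", "sip:b@example.com")
        self.assertEqual(state, "ringing")
        transport.send_invite.assert_called_once_with(
            "sip:a@example.com", "sip:b@example.com"
        )

    def test_connect_rejects_calling_yourself(self):
        transport = Mock()
        controller = CallController(transport)
        with self.assertRaises(ValueError):
            controller.connect("sip:a@example.com", "sip:a@example.com")
        transport.send_invite.assert_not_called()
```

Saved as a module, this can be run with `python -m unittest`. Written test-first, the two test methods would exist before `connect` does.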

Labels: Agile, concurrency, fitnesse, mocks, robustness, software engineering, TDD, Testing

Saturday, March 01, 2008

More Thoughts on Future of TV and Movies

I've read another great article on 'Hollywood vs the internet' thanks to the excellent newspaper, The Economist.

The article discusses how the Hollywood companies have missed out on using the web to leverage distribution of their media. It cites some companies which have sprouted up offering films at reasonable prices in a range of formats, awesome! Thing is, these sites are illegal. Bummer. It's not for want of trying to get some stuff out there; there are some companies offering limited titles, but no one company seems fully driven to use the web yet.

I wanted to write something about this, but it wasn't until I read San1t1's excellent post on ownership and 20th century mass media that my thoughts stirred once again.

If we ignore, for one moment, worrying stories about ISP bloodbaths and broadband providers not being able to handle the web as a platform... I really don't want to buy another CD or DVD again. What I would like is to purchase rights (paid or free - free is the best price) to view or listen to content wherever and whenever the hell I want!

Would I pay £10 for an 'on-demand' movie that I could watch as many times as I wanted, and could watch on my cable box, laptop or iPod? Probably yes. Would I pay that for being able to listen to my music through my cable box, through a web site or my iPod? Probably yes.

As an interesting aside, San1t1 also published another post along similar lines.

Where does this leave us all? While both Hollywood and the music industry innovate on what they think their customers need, I for one certainly continue to push for zero touch, high-definition, highly accessible content, music, films and TV.